This week’s system design refresher:

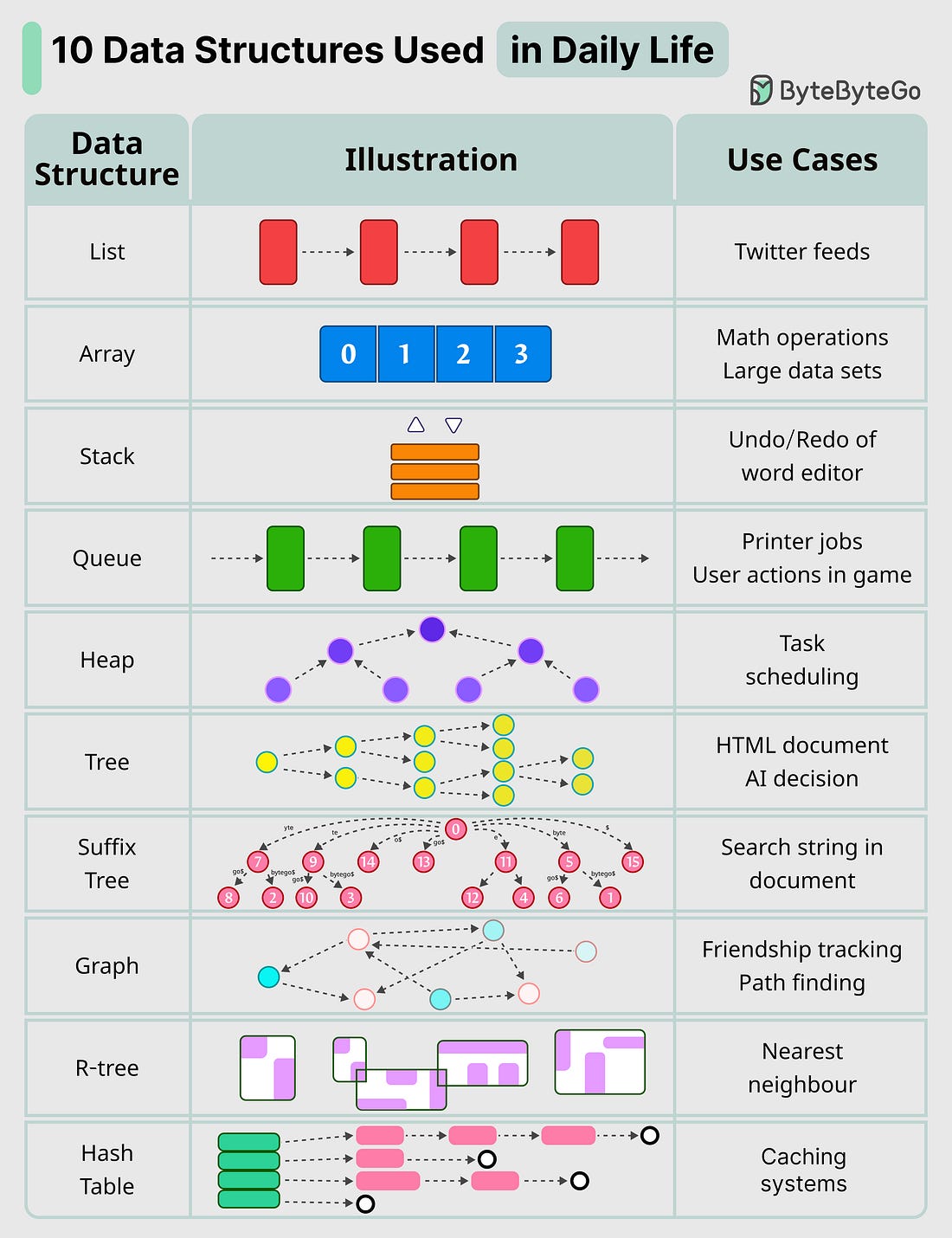

10 Key Data Structures We Use Every Day

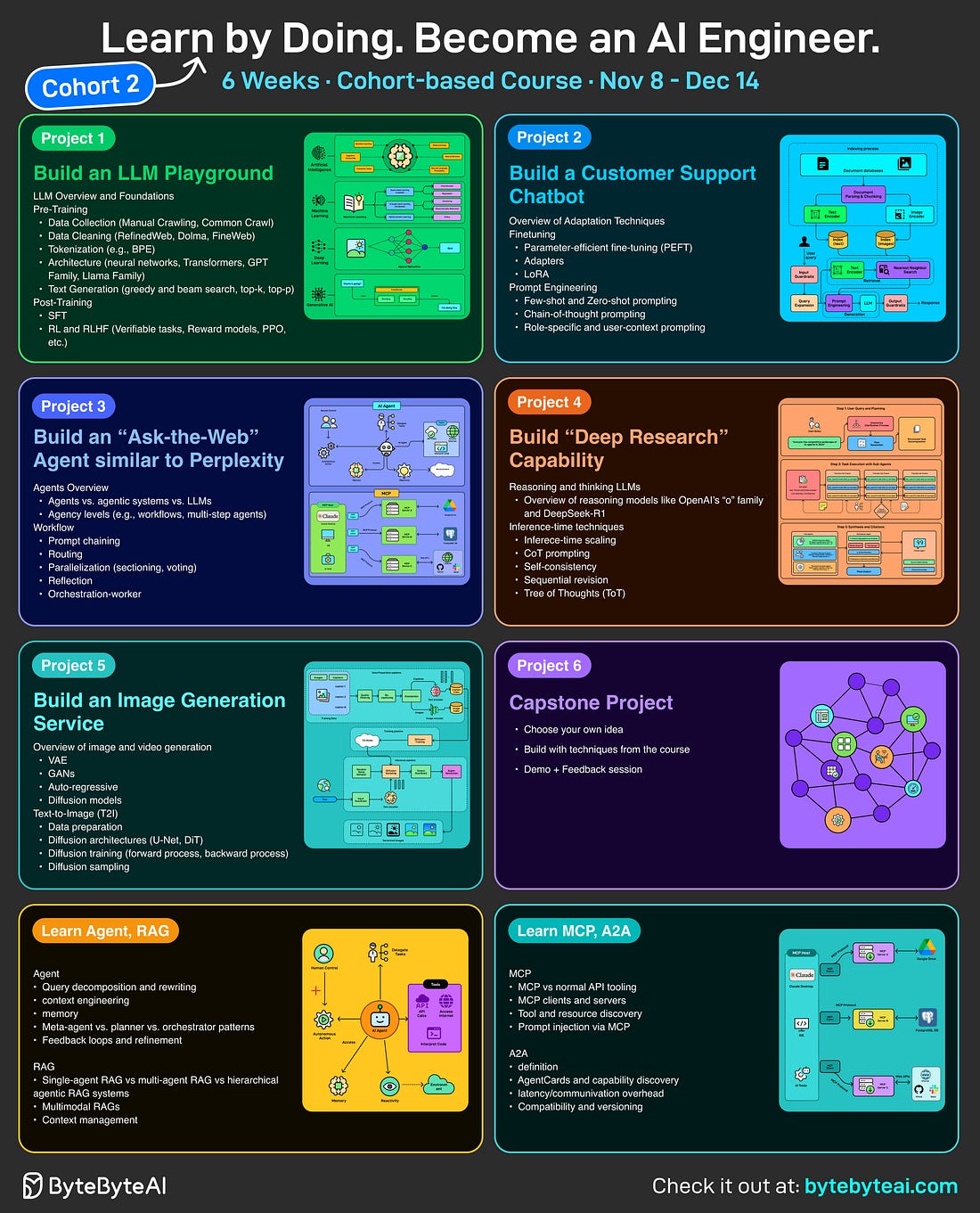

Over to you: Which additional data structures have we overlooked?

🚀 New Launch: Become an AI Engineer | Learn by Doing | Cohort 2!

After the incredible success of our first cohort (almost 500 people attended), I’m thrilled to announce the launch of Cohort 2 of Become an AI Engineer! This is not just another course about AI frameworks and tools. Our goal is to help engineers build the foundation and end-to-end skill set needed to thrive as AI engineers. Here’s what makes this cohort special:

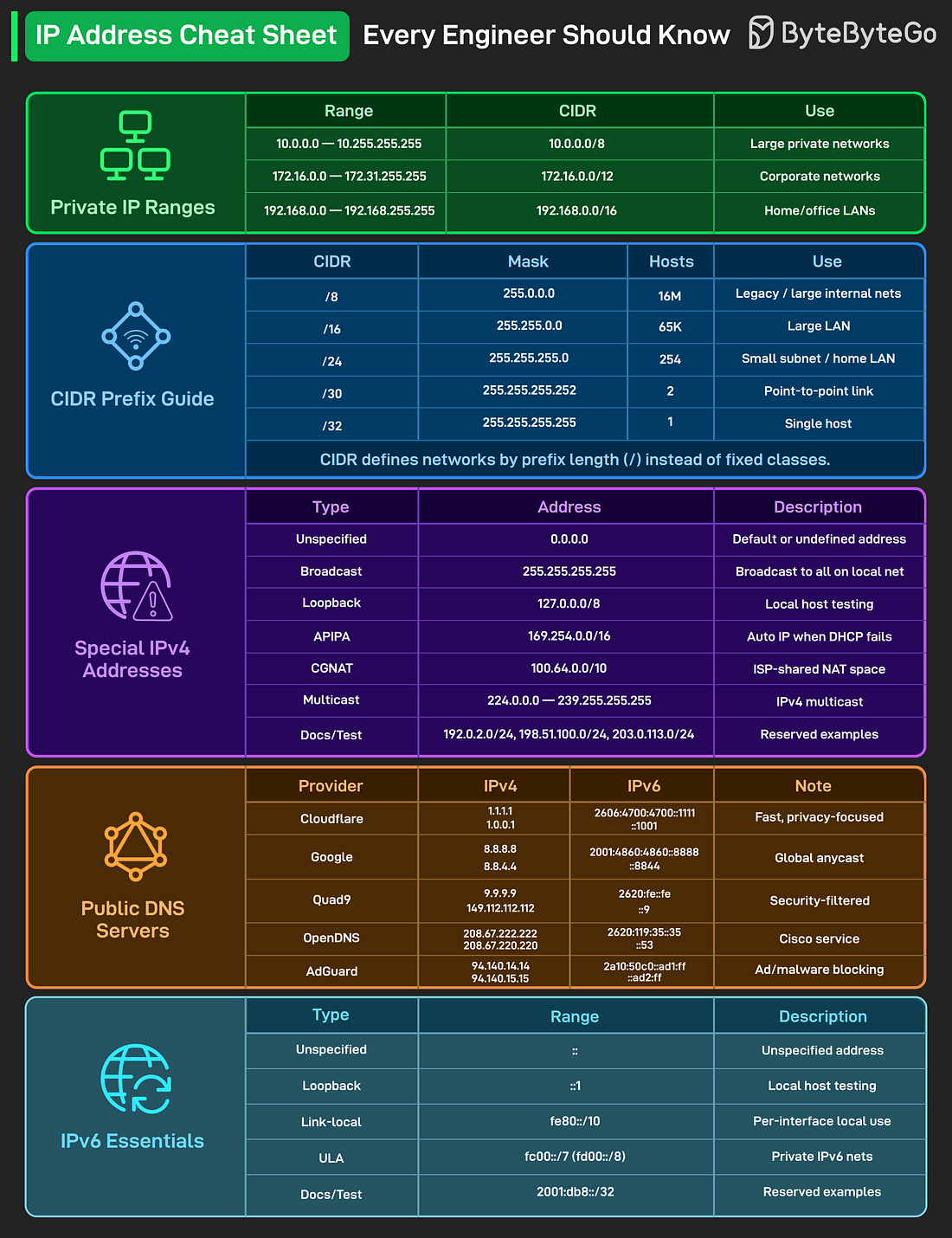

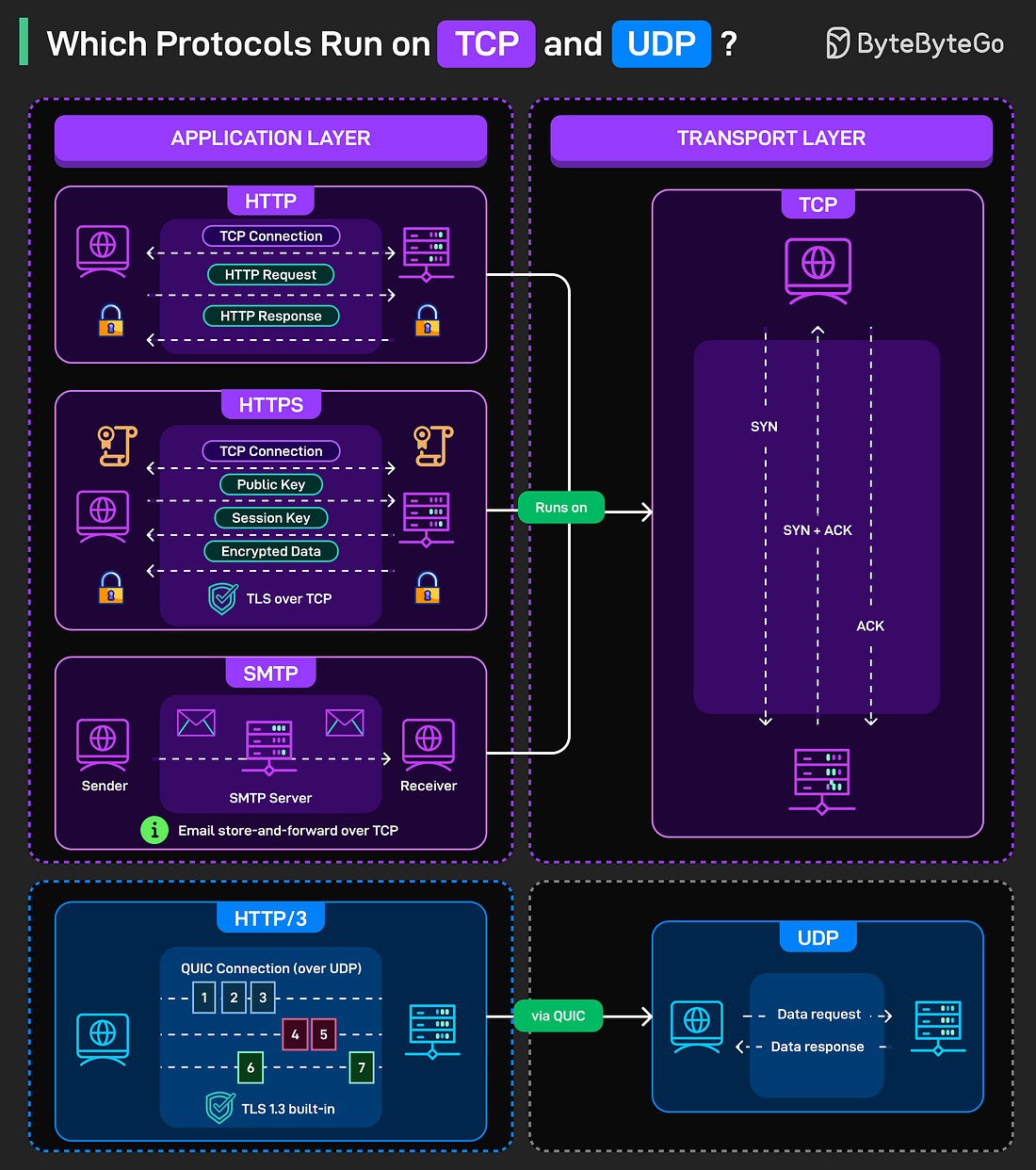

We are focused on skill building, not just theory or passive learning. Our goal is for every participant to walk away with a strong foundation for building AI systems.

IP Address Cheat Sheet Every Engineer Should Know

Which Protocols Run on TCP and UDP

Every message sent over the internet has two layers of communication, one that carries the data (transport) and one that defines what the data means (application). TCP and UDP sit at the transport layer, but they serve completely different purposes.

TCP is connection-oriented. It guarantees delivery, maintains order, and handles retransmission when packets get lost.

UDP is connectionless. No handshake. No guaranteed delivery. No order preservation. Just fire data requests and responses into the network and hope they arrive. Sounds chaotic, but it’s fast.
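To make the contrast concrete, here is a minimal Python sketch using the standard `socket` module over loopback. The function names `udp_roundtrip` and `tcp_roundtrip` are made up for this illustration. The UDP path sends a datagram with no connection setup, while the TCP path has to connect and accept before any bytes move. Loopback rarely drops packets, so this shows the API difference, not real-world loss.

```python
import socket


def udp_roundtrip(payload: bytes) -> bytes:
    """Send one datagram over loopback with no handshake, then read it back."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        receiver.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
        receiver.settimeout(2.0)         # UDP gives no delivery guarantee, so never wait forever
        sender.sendto(payload, receiver.getsockname())  # fire and hope
        data, _addr = receiver.recvfrom(4096)
        return data
    finally:
        sender.close()
        receiver.close()


def tcp_roundtrip(payload: bytes) -> bytes:
    """Connect first (handshake), then send an ordered, reliable byte stream."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    client = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        listener.bind(("127.0.0.1", 0))
        listener.listen(1)
        client.connect(listener.getsockname())  # handshake happens here
        conn, _addr = listener.accept()
        with conn:
            conn.settimeout(2.0)
            client.sendall(payload)
            client.shutdown(socket.SHUT_WR)     # signal end of stream to the reader
            chunks = []
            while True:
                chunk = conn.recv(4096)
                if not chunk:                   # empty read means the peer finished sending
                    break
                chunks.append(chunk)
            return b"".join(chunks)
    finally:
        client.close()
        listener.close()
```

Note that the UDP version needs a timeout because nothing tells the receiver a datagram was lost, while the TCP version can simply read until the stream closes.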

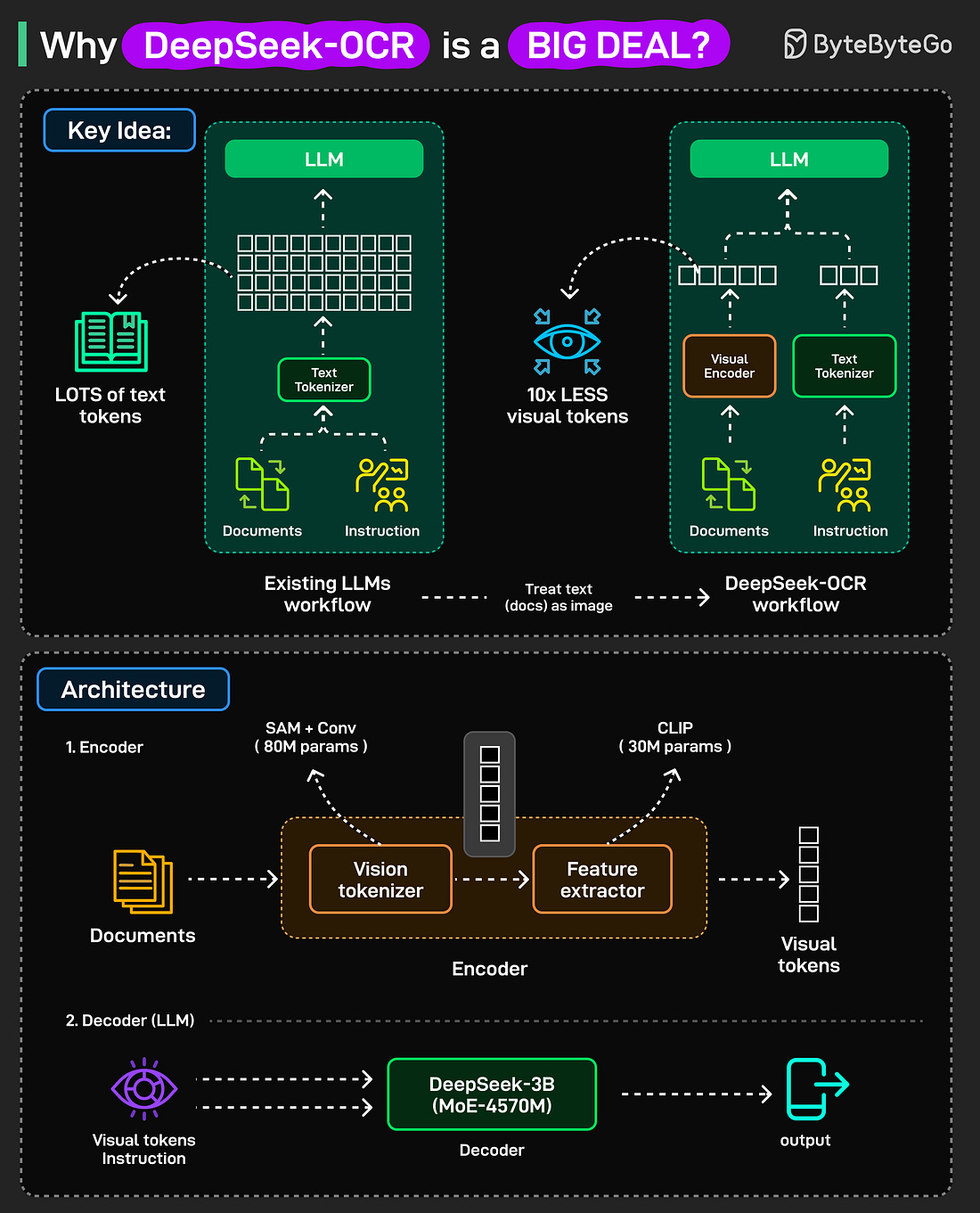

Over to you: What tools do you use to analyze transport layer performance?

Why is DeepSeek-OCR such a BIG DEAL?

Existing LLMs struggle with long inputs because they can only handle a fixed number of tokens, known as the context window, and attention cost grows quickly as inputs get longer.

DeepSeek-OCR takes a new approach. Instead of sending long context directly to an LLM, it turns it into an image, compresses that image into visual tokens, and then passes those tokens to the LLM. Fewer tokens lead to lower computational cost from attention and a larger effective context window. This makes chatbots and document models more capable and efficient.

How is DeepSeek-OCR built?

The system has two main parts:

When to use it?

DeepSeek-OCR shows that text can be efficiently compressed using visual representations. It is especially useful for handling very long documents that exceed standard context limits. You can use it for context compression, standard OCR tasks, or deep parsing, such as converting tables and complex layouts into text.

Over to you: What do you think about using visual tokens to handle long-context problems in LLMs? Could this become the next standard for large models?
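The token-saving argument can be put into rough numbers. The sketch below assumes standard full self-attention, where every token is scored against every other token, so cost grows with the square of the token count. The compression ratio is a hypothetical input for illustration, not a figure taken from this post, and the function names are invented for the example.

```python
def attention_pair_count(num_tokens: int) -> int:
    """Query-key pairs scored by full self-attention over num_tokens tokens."""
    return num_tokens * num_tokens


def compression_savings(text_tokens: int, compression_ratio: float) -> dict:
    """Estimate the effect of replacing text tokens with fewer visual tokens.

    compression_ratio is an assumed value: text tokens represented per visual token.
    """
    visual_tokens = max(1, round(text_tokens / compression_ratio))
    return {
        "visual_tokens": visual_tokens,
        "attention_cost_reduction": (
            attention_pair_count(text_tokens) / attention_pair_count(visual_tokens)
        ),
    }


def effective_context(window_tokens: int, compression_ratio: float) -> int:
    """Text tokens that fit in a fixed window when each slot holds a visual token."""
    return int(window_tokens * compression_ratio)


# Hypothetical example: a 10,000-token document compressed 10x.
savings = compression_savings(text_tokens=10_000, compression_ratio=10)
```

Under these assumptions, a 10x reduction in tokens cuts the attention pair count by 100x, which is why shrinking the token count matters more than it first appears.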

EP187: Why is DeepSeek-OCR such a BIG DEAL?


01 November
