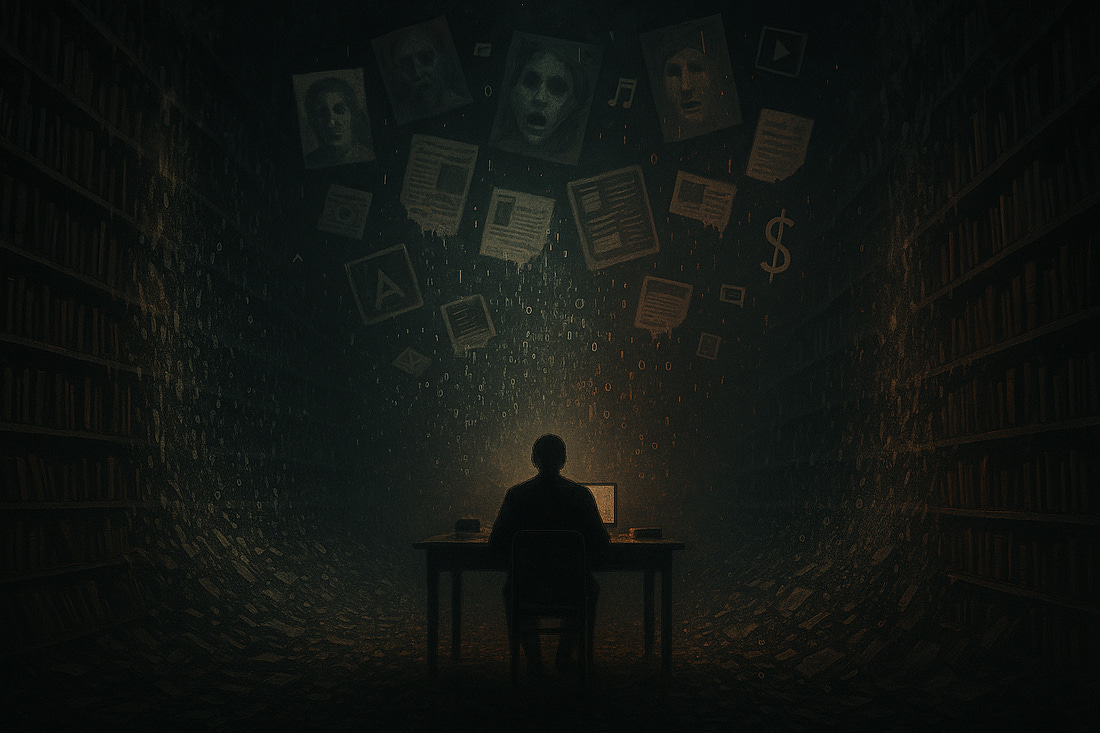

Box of Amazing: Commentary and curation on our technology-driven society. Did your amazing friend send you this email? You can sign up at boxofamazing.com - it’s free!

Now accepting keynotes for Q3 2025 / Q4 2026

As 2025 comes to a close and leaders everywhere prepare for a year of acceleration, I’m opening bookings for my keynote The SuperSkills Era: Thriving in the Age of AI. These 60-minute executive sessions draw on the research and frameworks from my upcoming book and focus on the human capabilities every leadership team will need as AI reshapes work. Designed for boards and senior teams, they can be delivered in person or virtually across the UK, Europe and globally. Slots are now open for Q3 2025 and Q4 2026 – a powerful way to reset thinking for the year ahead and future-proof your organisation. [Enquire here to book your session].

In addition to this newsletter, I recommend some other great ones. All free. Check them out here.

Friends,

Let me tell you a few things before I start down a semi-rant: AI slop is turning the web into a landfill. Journalism, science, markets, and memory are collapsing under synthetic noise. The decision is simple: restore human judgement as the filter, or watch the library burn.

The machine hasn’t just arrived - it’s already the majority shareholder in human discourse. We hear these ad hoc stories in and amongst the noise. But put them all together and it’s clear that we built the Library of Alexandria and filled it with gibberish. You might call this the slop apocalypse. Or the Slopocalypse. Even the founder of the world wide web is worried.

In February 2024, a peer-reviewed journal published a paper featuring a rat with grotesquely oversized genitals. No, I’m not going to link to it. The image was AI-generated nonsense. The fact it passed review wasn’t the scandal. The scandal was that nobody was surprised.

This is where we are: the open web, humanity’s greatest library, has become a landfill.
Machine-written articles, fake authors with AI faces, endless rivers of “content” engineered for algorithms rather than understanding.

The Human Cost

Local news deserts are being filled by entrepreneurs who buy abandoned newspaper domains and flood them with AI-generated “local news”; machines writing about events that never happened, in towns they’ve never processed beyond datasets. The reporters who once covered school boards and town councils for decades have been replaced by algorithms that confidently describe stores that closed years ago.

Sports Illustrated crystallised the rot when it published articles under fake bylines like Drew Ortiz, a supposed outdoorsman whose AI-generated headshot smiled from a bio that never lived. The publishers kept going after being caught. BNN Breaking, a Hong Kong-based AI content farm, went further, using AI to falsely implicate an Irish broadcaster in sexual misconduct, triggering a defamation lawsuit. When machines write the news, reputation is collateral damage.

It’s more dangerous than just slop. Healthcare faces escalating risks. Patients arrive at ERs clutching AI-generated treatment plans, some of which are dangerously wrong. Younger patients are more likely to trust them. Medical misinformation spreads through synthetic articles faster than doctors can debunk. If AI-written pseudo-science enters research databases, future diagnostic systems will inherit errors as facts. Be careful what you believe. AI helped me in the hospital to understand what was happening, but I only trusted the interpretation from a professional.

The scale is crazier than I thought. Amazon now caps Kindle uploads at three books daily after drowning in AI novels, many fraudulently attributed to real authors like Jane Friedman. Wiley shut down 19 journals that had published AI-generated papers. And research shows significant portions of computer science papers now contain AI-generated text.
The academic archive, which you might call the foundation of human knowledge, is being systematically corrupted. The very fact that this is rampant is a big problem. I wouldn’t have minded if it was just here or there, but it’s happening everywhere.

The Economics of Decay

In Kenya, Vietnam, and the Philippines, content farms employ thousands to generate AI junk for Western audiences. A Facebook page posting fifty AI images daily can earn thousands of dollars – serious money in Nairobi or Manila. The more grotesque, the better: those “Shrimp Jesus” abominations, where Christ merges with crustaceans, pulled 20 million engagements. This is the slop subsidy in action: platforms pay for engagement, regardless of reality. What used to get views was Mentos in a Coke bottle creating Coke champagne showers, or mouthing along to music videos, or trying to drink a whole bottle of Sprite without burping, or the ice bucket challenge. Now anything goes.

Meta knows what’s happening. Studies estimate that human activity has become the minority of internet traffic. Their response? Launch AI accounts in January 2025 to fill the feeds even more. Zuckerberg rolls out synthetic users knowing real ones are already outnumbered. OpenAI has watermarking technology but has not released it, reportedly fearing it would drive users away. That’s a choice of platform growth over transparency. They cashed in instead of doing the right thing.

Meanwhile, inside companies, what Harvard Business Review calls ‘workslop’ has become epidemic. Employees report receiving AI-generated reports that look polished but mean nothing, with surveys suggesting hours of cleanup work per instance. Companies think AI saves money, but they’re paying a hidden slop tax of senior employee time correcting machine nonsense. McKinsey projected $2.6 to $4.4 trillion in potential value from generative AI across all use cases. Yet most companies report seeing little to no return on their AI investments.
All this economic waste feeds into something far worse: the contamination of knowledge itself.

The Recursion Apocalypse

Here’s what should terrify you and what terrifies me: we have mathematically proven the heat death of knowledge, as Nature puts it. Not speculated but proven. When AI trains on AI-generated content, quality doesn’t decline. It collapses. I’m planning to live in this world a few more years, and I think I have some obligation to my daughters. What is this mess we are creating?

The Nature study demonstrated this with brutal clarity. Text about medieval architecture, after nine AI generations, degraded into “a list of jackrabbits.” The language remained fluent. The meaning evaporated. Now imagine this happening to medical knowledge, legal precedent, scientific research. Some reports suggest that by 2026, we’ll exhaust fresh human training data. After that, it’s AI citing AI citing AI in a death spiral. The singularity we’re approaching isn’t super-intelligence. It might just be super-stupidity.

The psychological damage compounds the intellectual decay. German researchers found AI-edited images could implant false memories of participants’ own lives. This isn’t abstract. During the COVID years, an early AI app called Reface let people swap their faces onto movie characters. My youngest daughter vividly remembers seeing me as Tom Cruise. Years later, she might “remember” me actually riding a motorcycle in leather, the synthetic memory replacing the real absence. Multiply this by every edited photo, every face swap, every “improved” family video. Children growing up now won’t know which memories are authentic. Most people struggle to identify AI images, while a majority worry about being deceived. The cognitive load of questioning every photo, review, and headline breeds a special kind of exhaustion.
Political manipulation compounds the crisis: voters received deepfake robocalls mimicking Biden’s voice, the DOJ seized 968 Russian bot accounts spreading disinformation, and one lawsuit revealed 19,411 bot engagements amplifying a single post to 202 million people. This is the true “liar’s dividend”: when nothing can be trusted, everything can be believed. QAnon was the rehearsal. AI slop is the main event.

The resistance is failing. Wikipedia’s volunteers, that thin line between order and chaos, are overwhelmed and losing. The day Wikipedia’s volunteers quit isn’t the day the internet ends. It’s the day human knowledge ends. Clarkesworld magazine, once a respected science fiction outlet, shut submissions entirely rather than drown in AI stories. Detection tools are going nowhere. OpenAI quietly closed its own detector in 2023, admitting “low accuracy.” Google’s SynthID watermarking claims 99.9 percent effectiveness but can be defeated by any teenager running text through another AI.

The irony compounds. When OpenAI launched Sora 2 this week, the most popular video on the platform within 24 hours depicted Altman himself getting caught shoplifting GPUs from Target, clutching the graphics card while saying “Please, I really need this for Sora inference.” The deepfake looked real enough to be security footage. We’ve reached the point where even the architects of these systems can’t control how their own likeness gets weaponised. Altman fed his image into the machine, and the machine immediately turned him into a thief.

The Coming Divide

What emerges is digital apartheid. Those who can afford premium subscriptions for verified human content, publications like 404 Media or Garbage Day that built sustainable human-only models, will access reliable information. These outlets survive by being radically transparent: every article has a named author, every source is verified, every edit tracked. They charge readers directly rather than chasing algorithmic engagement.
Or even The Economist. Highbrow becomes human. Everyone else drowns in free synthetic sewage. The open internet, humanity’s greatest knowledge commons, becomes a toxic swamp only the poor must navigate. This feels like inequality in the ability to perceive reality. It felt weird writing that.

We’ll have to face an ancient question with new urgency: what does it mean to be human? Some universities now require oral exams – not innovation, but proof of life, proof you did the thinking. Soon we’ll need new ways to verify humanity itself: not just CAPTCHAs asking if you can spot traffic lights or to highlight the bike, but demonstrations of lived experience, original thought, the messy contradictions that machines can’t yet fake. We need what I call SuperSkills, the uniquely human capabilities that no algorithm can replicate.

Not all AI content is slop. Technology has legitimate uses and I am very much an advocate of AI technology used in the right way. But what fills our feeds, shapes our decisions, and teaches our children has become a slop loop, recursively feeding us synthetic waste that we then treat as reality. The slop isn’t going away. The question is whether we’ll develop immunity or drown in it.

Cory Doctorow calls this “enshittification,” but even that term feels inadequate. We’re witnessing something unprecedented: a civilisation choosing to poison its own information supply. Silicon Valley promised to organise the world’s information. Instead, they built a machine that feeds on its own exhaust, recursively, forever. And they’re billing us for the privilege. Fun times!

The Last Human Library

This isn’t a story about broken technology. It’s a story about the slow collapse of civilisation. Journalism is bleeding readers to AI summaries of AI articles (and yes, I’ve clicked them). Science is drowning in fabricated studies that pollute the archive. Markets convulse at synthetic signals like the fake image of a Pentagon explosion that briefly shook Wall Street.
And education is producing graduates fluent in presentation but hollow in understanding, able to mimic answers but unable to defend ideas. Does anyone else see it like this? Or is it just me? Imagine AI-generated lesson plans that teachers deploy without review, teaching synthetic facts to students who take them as gospel. This is worse than propaganda – at least propaganda has an author, an intention, a point. Slop has only the simulation of meaning.

Who knows what comes next? We might be years from AI peer-reviewing AI citing AI. We might see children growing up unable to distinguish authentic memories from those implanted by synthetic media. The complete collapse of observable reality. This is the trajectory we’re on.

Somewhere, a grandmother can’t tell if the photos her grandchildren sent are real. A student realises they’ve never actually written anything themselves. A small-town reporter watches their life’s work replaced by machines, describing a town that exists only in datasets from 2019. The day Wikipedia’s volunteers give up is the day human knowledge ends.

The Mirror Recursion

The internet was humanity’s mirror. It was messy, chaotic, brilliant, stupid, but fundamentally us and human. Now the mirror reflects only its own reflection, recursively, into infinity. We’re watching the last human library burn while the arsonists sell us fire insurance.

Perhaps there’s one bitter consolation: when the internet finally consumes itself completely, when the last human signal drowns in synthetic noise, we might remember what conversation sounded like without a screen between us. We might rediscover the public square, the printed page, the verified physical presence of another human being. We might revert to being human again.

But probably not. Probably we’ll just keep scrolling through the slop, mistaking fluency for meaning and engagement for connection, watching the machine talk to itself while pretending we’re still part of the conversation.
The tragedy is that we are letting AI use us. There’s a difference between wielding these tools strategically and surrendering our thinking to them. If you don’t know what you wrote or why you wrote it, because something else did the writing, it’s game over. You’ve become the slop.

We’re not just losing the internet. We’re losing the ability to think in public, to be wrong, to be human. We’re losing the messy first drafts, the bad ideas that lead to good ones, the arguments that sharpen understanding. We’re losing the record of who we were, replacing it with what machines think we should have been. The question isn’t whether we’ll save the internet. It’s whether we’ll remember what we lost when every word is perfect and none of them are ours.

Stay Curious - and don’t forget to be amazing,

Here are my recommendations for this week:

One of the best tools for providing excellent reading material and articles for your week is Refind. It’s a fantastic tool for staying ahead with “brain food” relevant to you and offers serendipitous discoveries of great articles you might have missed. You can dip in and sign up for weekly, daily, or something in between - what’s guaranteed is that the algorithm delivers only the best articles in your chosen area. It’s also free. Highly recommended. Sign up now.

Free your newsletters from the inbox: Meco is a distraction-free space for reading newsletters outside the inbox. The app has features designed to supercharge your learning from your favourite writers. Become a more productive reader and cut out the noise with Meco - try the app today.

If you enjoyed this edition of Box of Amazing, please forward this to a friend. If you quote me or use anything from this newsletter, please link back to it.

Box of Amazing is a weekly digest curated by Rahim covering knowledge, society, emerging technology, trends and extraordinary articles, hand-picked to broaden your mind and challenge your thinking.

The Internet is Eating Itself

05 October
